Domain-specific LLM

Some Explanations

A domain-specific LLM is a general-purpose model that has been trained or fine-tuned to perform well-defined tasks dictated by organizational guidelines. The term “domain” here refers to a specific area of knowledge or expertise, such as medicine, finance, law, technology, or any other specialized field.

Reasons for building domain-specific LLMs

Large language models (LLMs) have gained popularity and achieved remarkable results on open-domain tasks, but their performance in real-world industrial, domain-specific scenarios is often mediocre because they lack specialized domain knowledge. General-purpose LLMs frequently hallucinate when solving domain-specific problems, which greatly limits their usefulness. In addition, unlike humans, LLMs struggle to apply common sense to distinguish right from wrong. The overall purpose of building a domain-specific LLM is to make the model more adept at understanding and generating text relevant to that particular domain.

Some Models

- Medicine: Med-PaLM, ChatDoctor, …

- Law: ChatLaw, LaWGPT, …

- Finance: BloombergGPT, FinGPT, …

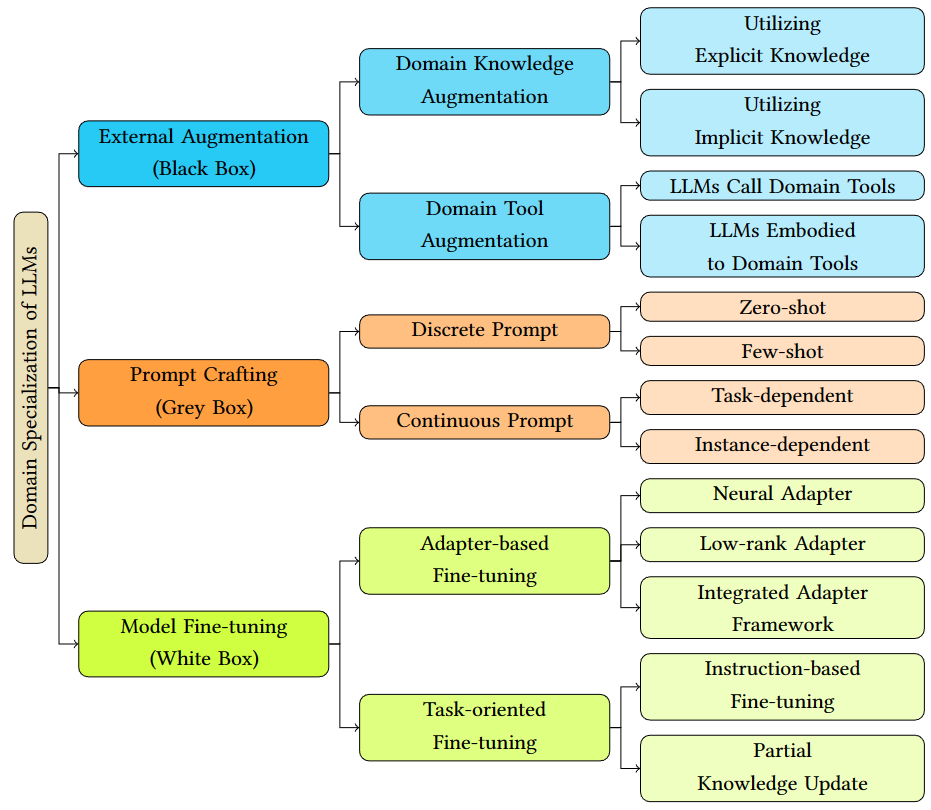

Building Domain-specific LLMs

External Augmentation

Domain Knowledge Augmentation

Augmenting language models with relevant information retrieved from various knowledge stores has been shown to improve performance. Using the input as a query, a retriever first retrieves a set of documents (i.e., sequences of tokens) from a corpus; a language model then incorporates the retrieved documents as additional information to make a final prediction. This retrieval-based approach supplies the model with domain-specific knowledge at inference time, making it capable of understanding and responding to user queries within a specific industry or field.
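A minimal sketch of the retrieve-then-read loop described above, assuming a toy in-memory corpus and a simple word-overlap score standing in for a real retriever (practical systems typically use BM25 or dense embeddings). The helper names `retrieve` and `build_augmented_prompt` are hypothetical; the resulting prompt would be passed to whichever language model is in use.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, doc: str) -> int:
    """Count query tokens that also occur in the document (respecting multiplicity)."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(doc))
    return sum(min(count, d[tok]) for tok, count in q.items())


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k documents with the highest overlap score for the query."""
    ranked = sorted(corpus, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]


def build_augmented_prompt(query: str, docs: list[str]) -> str:
    """Prepend the retrieved documents to the query as additional context."""
    context = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(docs))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical domain corpus.
corpus = [
    "Warfarin is an anticoagulant; combining it with aspirin raises bleeding risk.",
    "Metformin is a first-line medication for type 2 diabetes.",
    "The Basel III framework sets capital requirements for banks.",
]

query = "What is the bleeding risk of warfarin with aspirin?"
docs = retrieve(query, corpus, k=1)
print(build_augmented_prompt(query, docs))
```

Because the knowledge lives in the corpus rather than in the model's weights, updating the corpus is enough to give the model access to new domain facts.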

Domain Tool Augmentation

Domain tool augmentation can work in two directions. First, LLMs can call domain tools: the LLM generates executable commands for a tool, the tool runs them, and the LLM processes the outputs. Giving an LLM access to search technology and databases in this way lets it cope with larger and more dynamic knowledge spaces than its parameters alone can hold. Second, LLMs can be called by domain tools to serve as smart agents in interactive environments, i.e., LLMs embodied in domain tools.
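The "LLM calls a tool" direction can be sketched as a small dispatcher. This assumes the model emits commands in a hypothetical `CALL tool_name(arg1, arg2)` format; the model output is hard-coded, and both tools are illustrative stand-ins for real domain services such as a database or search API.

```python
import re
from typing import Callable


# Hypothetical domain tools (a real system might wrap a pricing database or search API).
def compound_interest(principal: str, rate: str, years: str) -> str:
    p, r, n = float(principal), float(rate), int(years)
    return f"{p * (1 + r) ** n:.2f}"


def lookup_ticker(company: str) -> str:
    table = {"apple": "AAPL", "microsoft": "MSFT"}
    return table.get(company.lower(), "UNKNOWN")


TOOLS: dict[str, Callable[..., str]] = {
    "compound_interest": compound_interest,
    "lookup_ticker": lookup_ticker,
}

# Assumed command syntax emitted by the LLM, e.g. "CALL lookup_ticker(Apple)".
COMMAND = re.compile(r"CALL\s+(\w+)\((.*?)\)")


def run_tool_calls(llm_output: str) -> list[str]:
    """Parse every tool command in the LLM output, execute it, and collect the results."""
    results = []
    for name, raw_args in COMMAND.findall(llm_output):
        args = [a.strip() for a in raw_args.split(",") if a.strip()]
        tool = TOOLS.get(name)
        results.append(tool(*args) if tool else f"error: unknown tool {name}")
    return results


# Stand-in for text generated by the model.
llm_output = "CALL lookup_ticker(Apple)\nCALL compound_interest(1000, 0.05, 2)"
observations = run_tool_calls(llm_output)
print(observations)
```

In a full loop, the `observations` would be appended to the conversation so the LLM can process the tool outputs and produce its final answer.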

Prompt Crafting

Pre-training on prompts can enhance models’ ability to adhere to user intentions and generate accurate and less toxic responses. The use of prompts plays a crucial role in guiding the content generation process of LLMs and setting expectations for desired outputs.

Discrete prompts

Craft task-specific natural language instructions that prompt the LLM and elicit domain-specific knowledge from its parameter space.
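A discrete prompt is ordinary text, so it can be managed as a template. The sketch below uses Python's `string.Template` to fill a hypothetical legal-domain instruction; the wording, labels, and jurisdiction are illustrative assumptions, not a tested prompt.

```python
from string import Template

# Hypothetical task-specific instruction for classifying contract clauses.
LEGAL_PROMPT = Template(
    "You are an assistant specializing in $jurisdiction contract law.\n"
    "Task: classify the clause below as one of: $labels.\n"
    "Answer with the label only.\n\n"
    "Clause: $clause\n"
    "Label:"
)


def make_prompt(
    clause: str,
    jurisdiction: str = "U.S.",
    labels: tuple[str, ...] = ("indemnification", "termination", "confidentiality"),
) -> str:
    """Fill the discrete prompt template with a concrete input clause."""
    return LEGAL_PROMPT.substitute(
        jurisdiction=jurisdiction,
        labels=", ".join(labels),
        clause=clause.strip(),
    )


print(make_prompt("Either party may end this Agreement with 30 days' written notice."))
```

The domain role, the constrained label set, and the output instruction are all plain text, which is what distinguishes discrete prompts from the learnable vectors used by continuous prompts.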

Continuous prompts

Use learnable vectors, rather than explicit text instructions, to guide the model’s content generation.
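The idea behind continuous prompts can be shown with a toy, dependency-free sketch: a few learnable vectors are prepended to the frozen input embeddings, and gradient descent updates only those vectors. The "model" here (mean pooling followed by a fixed linear read-out) and all sizes are assumptions chosen so the gradient can be written by hand; real prompt tuning prepends vectors to the embedding sequence of a frozen transformer and trains them with a deep learning framework.

```python
import random

random.seed(0)
DIM = 4

# Frozen "model": mean-pool the input vectors, then apply a fixed linear read-out.
FROZEN_W = [0.5, -0.2, 0.3, 0.1]


def frozen_model(vectors):
    pooled = [sum(v[i] for v in vectors) / len(vectors) for i in range(DIM)]
    return sum(w * x for w, x in zip(FROZEN_W, pooled))


# Frozen token embeddings for one fixed input (stand-in for an embedded sentence).
token_embeddings = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in range(3)]

# Learnable soft prompt: two vectors prepended to the input, small random init.
soft_prompt = [[random.uniform(-0.1, 0.1) for _ in range(DIM)] for _ in range(2)]


def loss(target):
    out = frozen_model(soft_prompt + token_embeddings)
    return (out - target) ** 2


def train_step(target, lr=0.5):
    """One gradient step on the soft prompt; FROZEN_W and token_embeddings never change."""
    seq = soft_prompt + token_embeddings
    out = frozen_model(seq)
    # d loss / d p[i] = 2 * (out - target) * FROZEN_W[i] / len(seq), same for every prompt vector
    grad = [2 * (out - target) * w / len(seq) for w in FROZEN_W]
    for p in soft_prompt:
        for i in range(DIM):
            p[i] -= lr * grad[i]


target = 1.0
before = loss(target)
for _ in range(200):
    train_step(target)
after = loss(target)
print(f"loss before: {before:.4f}, after: {after:.6f}")
```

Only `soft_prompt` is modified during training, so the same frozen model can serve many domains, each with its own small set of prompt vectors.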

Model Fine-tuning

By fine-tuning a pre-trained model on a domain-specific dataset, the model can learn to leverage the expertise present in the new data, adapting its knowledge to the specific requirements of the targeted domain.

Adapter-based Fine-tuning

Insert small, task-specific modules (adapters) into the layers of the pre-trained model’s architecture. During fine-tuning, only the adapter parameters are updated while the pre-trained weights stay frozen. This allows the model to specialize in the domain-specific task without forgetting its pre-trained knowledge.
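A common adapter design is a residual bottleneck: project the hidden state down to a small dimension, apply a nonlinearity, project back up, and add the result to the input. The pure-Python sketch below assumes a hidden size of 768 and a bottleneck of 16 purely for illustration; the class name is hypothetical, and where adapters are placed inside each layer varies between designs.

```python
import random


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class BottleneckAdapter:
    """Residual adapter: h + W_up @ relu(W_down @ h). Only these weights would be trained."""

    def __init__(self, hidden: int, bottleneck: int, seed: int = 0):
        rng = random.Random(seed)
        # Down-projection (hidden -> bottleneck) with small random init.
        self.w_down = [[rng.gauss(0, 0.02) for _ in range(hidden)] for _ in range(bottleneck)]
        # Up-projection (bottleneck -> hidden) initialized to zero,
        # so the adapter starts out as the identity map.
        self.w_up = [[0.0] * bottleneck for _ in range(hidden)]

    def __call__(self, h):
        z = [max(0.0, x) for x in matvec(self.w_down, h)]  # ReLU in the bottleneck
        return [a + b for a, b in zip(h, matvec(self.w_up, z))]

    def num_params(self) -> int:
        return sum(len(r) for r in self.w_down) + sum(len(r) for r in self.w_up)


hidden, bottleneck = 768, 16
adapter = BottleneckAdapter(hidden, bottleneck)
h = [0.1] * hidden  # stand-in for the output of a frozen transformer sub-layer
print(adapter(h) == h)       # zero-initialized up-projection leaves h unchanged
print(adapter.num_params())  # 2 * 768 * 16 trainable weights
print(hidden * hidden)       # weights in a single dense 768x768 matrix, for comparison
```

Starting from the identity means inserting the adapter does not disturb the pre-trained model's behavior before training, and the trainable parameter count grows with the small bottleneck size rather than with the full hidden-by-hidden weight matrices.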

Task-oriented Fine-tuning

During this process, both the base model and the task-specific layers are updated to adapt to the nuances of the new task. Modifying the LLM’s internal parameters helps improve alignment with specific tasks and lets the model learn domain knowledge.
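To contrast with adapters, the toy sketch below updates every parameter. It assumes a two-parameter model in which `base` stands in for the pre-trained network and `head` for a task-specific layer, trained with plain gradient descent on hypothetical domain data following y = 3x; a real setup would fine-tune a full LLM with a deep learning framework.

```python
# Toy two-stage model: a "pre-trained" base feature extractor plus a task-specific head.
params = {"base": 0.8, "head": 0.5}  # hypothetical pre-trained base weight, fresh head

# Hypothetical domain data following y = 3x.
data = [(x, 3.0 * x) for x in (-1.0, -0.5, 0.5, 1.0)]


def predict(p, x):
    feature = p["base"] * x     # base model
    return p["head"] * feature  # task-specific layer


def mse(p):
    return sum((predict(p, x) - y) ** 2 for x, y in data) / len(data)


def full_finetune_step(p, lr=0.05):
    """Update BOTH base and head (with adapters, the base would stay frozen)."""
    g_base = g_head = 0.0
    for x, y in data:
        err = predict(p, x) - y
        g_base += 2 * err * p["head"] * x / len(data)
        g_head += 2 * err * p["base"] * x / len(data)
    p["base"] -= lr * g_base
    p["head"] -= lr * g_head


start = dict(params)
for _ in range(500):
    full_finetune_step(params)
print(start, "->", {k: round(v, 3) for k, v in params.items()})
print(f"MSE: {mse(start):.3f} -> {mse(params):.6f}")
```

Both values move away from their starting points, which is the defining property of this approach: the base model itself changes, at the cost of updating (and storing) every parameter.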

This process is particularly useful for tailoring generic language models to perform effectively in specialized and professional domains.

References

Shi, W., Min, S., Yasunaga, M., Seo, M., James, R., Lewis, M., Zettlemoyer, L., & Yih, W. (2023). REPLUG: Retrieval-Augmented Black-Box Language Models. ArXiv, abs/2301.12652.

Wang, Z., Yang, F., Zhao, P., Wang, L., Zhang, J., Garg, M., Lin, Q., & Zhang, D. (2023). Empower Large Language Model to Perform Better on Industrial Domain-Specific Question Answering. ArXiv, abs/2305.11541.

Ling, C., Zhao, X., Lu, J., Deng, C., Zheng, C., Wang, J., Chowdhury, T., Li, Y., Cui, H., Zhang, X., Zhao, T., Panalkar, A., Cheng, W., Wang, H., Liu, Y., Chen, Z., Chen, H., White, C., Gu, Q., Pei, J., Yang, C., & Zhao, L. (2023). Domain Specialization as the Key to Make Large Language Models Disruptive: A Comprehensive Survey.